By Sofia Osborne

When Jasper Kanes was two years old, their parents took them whale watching for the first time. As is the case for many whale enthusiasts, that first encounter sparked a life-long devotion.

“There are photographs of me with my inflatable whale that I was in love with as a tiny, tiny toddler,” Kanes tells me. “Apparently I cried when my parents deflated it after giving it to me for Christmas so that we could drive home.”

Kanes went on to study whales during their undergraduate degree, starting with research projects for which they would observe whales from boats. “As I learned more about these animals, I realized just how important sound is to their world,” Kanes says. “They see with sound. They communicate with sound. It’s the most important sense to them. So I thought to myself, if I really want to understand them, I need to start looking at sound.”

British Columbia is especially well primed for this type of acoustics-based research, as the province’s coast is believed to house more underwater microphones—or hydrophones—for research purposes than anywhere else in the world. These hydrophones lie below the waves, recording the grunts of fish, the calls of whales and the rumbling of ships 24 hours a day, seven days a week, and producing mountains of data for scientists to comb through.

“It’s become very inexpensive to collect passive acoustic data,” Kanes, now a junior staff scientist for Ocean Networks Canada, explains. “Hard drives are getting really, really cheap. It’s getting easier to do autonomous deployments. And so everybody who’s collecting passive acoustic data has suddenly arrived at this point where we have gigabytes upon gigabytes—some of us have petabytes of data—that we just don’t have the time to look through … It’s very exciting because there’s lots to look at, but it’s a real challenge to figure out how [to] analyze all of this data? So a big push in the community right now is to develop automated tools.”

Such tools use artificial intelligence (AI) in much the same way as the speech recognition technology many of us already use, like Apple’s Siri or Amazon’s Alexa, explains Oliver Kirsebom, a senior staff scientist on the Meridian project at the Institute for Big Data Analytics at Dalhousie University. The same kind of algorithms that recognize human speech are now being used to recognize and analyze whale calls.

“The idea with these algorithms is that you can train them by feeding them examples of, in our case, sound clips,” he says. “So you know if this particular sound clip contains the call of a certain whale or not, and then this neural network learns to determine if that call is present in this sound clip through this training process.”
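For readers curious what that training process looks like in code, here is a minimal sketch. It is not the Meridian project's software: it swaps the neural network for a single artificial neuron (logistic regression), and it assumes each sound clip has already been boiled down to two made-up numbers, here imagined as "energy in the call's frequency band" and "tonality". The clips, the features and every function name are hypothetical stand-ins chosen for illustration.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range 0..1 so it reads as a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def score(model, features):
    """Estimated probability that a clip with these features contains a call."""
    weights, bias = model
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(examples, epochs=200, learning_rate=0.5):
    """Learn weights from labelled clips: label 1 = call present, 0 = absent."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # How far off was the guess for this labelled example?
            error = score((weights, bias), features) - label
            # Nudge the weights to make the same mistake smaller next time.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def make_clips(rng, count):
    """Hypothetical annotated data: synthetic features plus a human label."""
    clips = []
    for i in range(count):
        has_call = i % 2
        centre = (0.8, 0.7) if has_call else (0.2, 0.3)
        features = [rng.gauss(c, 0.1) for c in centre]
        clips.append((features, has_call))
    return clips


rng = random.Random(0)
model = train(make_clips(rng, 40))   # learn from 40 labelled clips
test_clips = make_clips(rng, 100)    # clips the model has never seen
correct = sum((score(model, f) > 0.5) == bool(label) for f, label in test_clips)
accuracy = correct / len(test_clips)
print(f"accuracy on unseen clips: {accuracy:.2f}")
```

The toy data here is deliberately easy to separate. Real recordings are far messier, which is part of why real detectors need so many labelled examples.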

The possibilities of advancing research into, and management of, whales with this technology are particularly vital in a time when so many whales in the Salish Sea—a stretch of ocean that runs from southern BC into Washington State—are threatened by many factors, including vessel strikes, ship noise and a dwindling food supply.

The Salish Sea is famously home to the southern resident killer whale, an orca ecotype that is critically endangered, with only 75 members remaining. But it is also vital habitat for other species and ecotypes: the marine-mammal-eating transient, or Bigg’s, killer whale; the northern resident killer whale; and the humpback whale, which has made a recent resurgence. Knowing where these whales are in real time, based on AI alerts drawn from hydrophones up and down the coast, could prevent many whale deaths, as vessels could be warned to slow down or leave an area when whales are present.

There is also tremendous research value in this archive of acoustic data. Certain whale calls can indicate particular behaviours, from humpback whales bubble-net feeding (a method in which a group of whales circles a school of fish, blowing a “net of bubbles” that disorients the fish, and uses vocalizations to coordinate the group and signal when the whales should open their mouths and feed) to orcas foraging for fish. Pods and even families of orcas often have their own distinct calls, so researchers can identify which groups are using certain areas and at which times of the year.

With so many benefits to be gained from collecting and analyzing this data, many organizations, including NGOs, First Nations, government agencies and private companies, have started to set up their own hydrophone projects.

Tom Dakin, an underwater acoustician who installs hydrophones up and down the coast for clients including the BC Coastwide Hydrophone Network—a coalition of NGO hydrophone projects—the Department of Fisheries and Oceans and Transport Canada, says there will soon be 21 hydrophones in the water for the network alone, collecting about 183 terabytes of data per year.
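To put that figure in perspective, a quick back-of-the-envelope calculation helps. The sketch below assumes decimal terabytes, an even split across the 21 hydrophones and uninterrupted year-round recording, none of which the network has confirmed; the function name is made up for illustration.

```python
TERABYTE = 10**12  # assumption: decimal terabytes, not binary tebibytes


def per_hydrophone_rates(total_tb_per_year=183, hydrophones=21):
    """Split a yearly data volume evenly across hydrophones (an assumption)."""
    bytes_per_year = total_tb_per_year * TERABYTE / hydrophones
    bytes_per_day = bytes_per_year / 365
    bytes_per_second = bytes_per_day / 86_400  # seconds in a day
    return {
        "tb_per_year": bytes_per_year / TERABYTE,
        "gb_per_day": bytes_per_day / 10**9,
        "kb_per_second": bytes_per_second / 10**3,
    }


rates = per_hydrophone_rates()
for name, value in rates.items():
    print(f"{name}: {value:.1f}")
```

Under those assumptions, each hydrophone would produce roughly 8.7 terabytes a year, or about 24 gigabytes every day.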

“It’s incredibly expensive to put things under water for long periods of time,” Dakin says. “So the sacrifices and the scrounging that people have done in order to be able to do the research that they’re doing is really awe-inspiring. They’re shining examples to the rest of us of the dedication that people can have.”

Janie Wray, CEO of the North Coast Cetacean Society and Science Director for Orca Lab, helped start the hydrophone network project with Dakin as a way to link the NGO hydrophones along BC’s coast. They also approached First Nations like the Heiltsuk of Bella Bella and the Kitasoo/Xai-xais of Klemtu to set up their own hydrophone projects in their territories.

The goal of the network is to bring all of the hydrophone equipment in the water up to the same level so that the data is comparable across the coast. They ultimately plan to create a website that will display an ocean noise health index, where the public will be able to look at noise levels throughout the province. The data collected by the hydrophones will also be available to anyone for their own research projects.

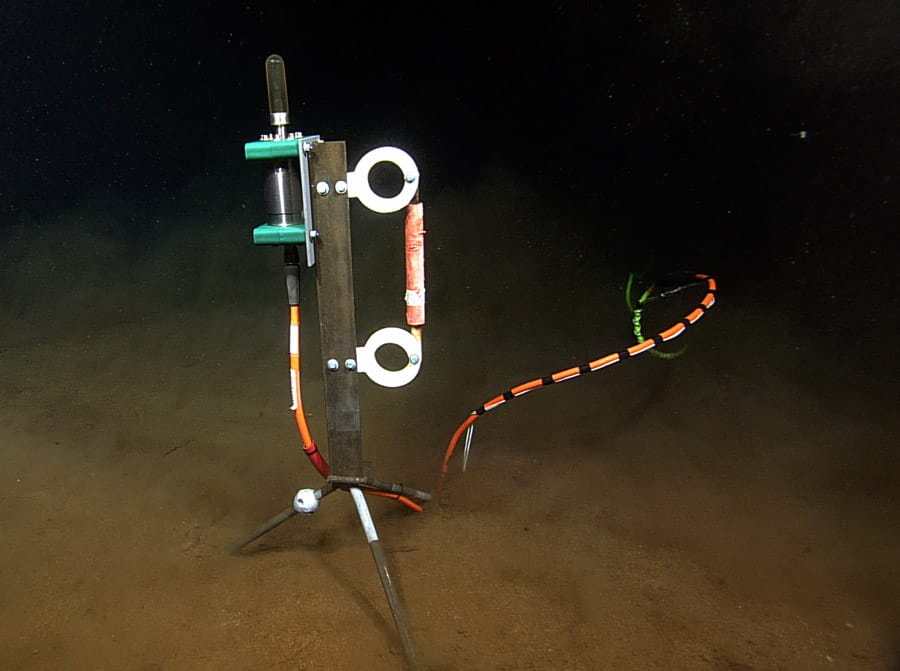

Naxys Hydrophone rests on a tripod after deployment to Cascadia Basin.

Photo courtesy of ONC/CSSF-ROPOS

Photo courtesy of Beamreach

“It’s phenomenal how many hydrophones are in the water,” Wray says. “And it’s great because all these different organizations are listening to whales, we’re all gathering data, but we’re also gathering data on ocean noise … Each hydrophone that we have in the water has an acoustic story to tell us about that habitat.”

As these hydrophone projects grow, so too do the AI projects that will allow scientists to parse through this important data. However, developing and training these algorithms is not without challenges, most notably a lack of annotated data sets—data that has been labelled by a bioacoustician and can be used to teach the AI what to look for.

“Although these algorithms can be trained to do really impressive things, they are kind of dumb too, in the sense that they require just a lot of data before they get to that point,” explains Kirsebom. “Although we keep talking about them as artificial intelligence, they’re still far away from what a human is capable of, which is to recognize a sound having heard just a few examples of that sound. And with a neural network, we have to show it so many examples before it starts to recognize that sound. And even then it will still make mistakes.”

Kanes, who specializes in studying passive acoustics, is one of very few people in the country creating annotated data sets that will be widely available for anyone to use in their projects.

“I’m in a unique position at Ocean Networks Canada to really help move things forward because I’m not being paid to fulfill the requirements of a specific project … I don’t have to keep my data confidential. All of the data I work with are available for anyone to use,” Kanes tells me. “My mandate is really just to help science move forward. So I’m quite free to produce datasets that are very, very public and to promote them to people to use.”

To label the data, Kanes does not usually listen to the recordings; instead, they look at spectrograms, visual representations of the sound data that show time on the X axis and frequency (how high or low the sound is) on the Y axis. With a practised eye, Kanes can recognize the shapes certain calls make in the spectrograms and pick out important pieces of data more quickly.
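A spectrogram can be built by chopping a recording into short overlapping frames and measuring how much energy each frame holds at each frequency. The sketch below is a rough illustration in plain Python, not the software Ocean Networks Canada uses. The frame size, the overlap, the 8,000-samples-per-second rate and the synthetic rising "call" are all assumptions, and the function names are hypothetical.

```python
import cmath
import math


def spectrogram(samples, sample_rate, frame_size=64, hop=32):
    """Return (times, freqs, power): power[i][k] is the energy in frame i, bin k."""
    # A Hann window tapers each frame so energy leaks less between bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    n_bins = frame_size // 2 + 1
    freqs = [k * sample_rate / frame_size for k in range(n_bins)]  # Y axis, Hz
    times, power = [], []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        row = []
        for k in range(n_bins):
            # Direct discrete Fourier transform of one frame at one frequency.
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            row.append(abs(acc) ** 2)
        power.append(row)
        times.append((start + frame_size / 2) / sample_rate)  # X axis, seconds
    return times, freqs, power


def synthetic_upsweep(duration=0.5, sample_rate=8000, f0=500.0, f1=2000.0):
    """A made-up tone that rises steadily from f0 to f1 hertz."""
    samples = []
    for i in range(int(duration * sample_rate)):
        t = i / sample_rate
        phase = 2 * math.pi * (f0 * t + (f1 - f0) * t * t / (2 * duration))
        samples.append(math.sin(phase))
    return samples


def peak_frequency_track(times, freqs, power):
    """For each time step, report the frequency holding the most energy."""
    track = []
    for t, row in zip(times, power):
        loudest_bin = max(range(len(row)), key=lambda k: row[k])
        track.append((t, freqs[loudest_bin]))
    return track


clip = synthetic_upsweep()
times, freqs, power = spectrogram(clip, sample_rate=8000)
track = peak_frequency_track(times, freqs, power)
print("start of clip:", track[0])
print("end of clip:  ", track[-1])
```

Plotted as an image, the rising tone would appear as a diagonal streak climbing from left to right, the kind of shape an annotator learns to spot at a glance.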

“It’s a real treat when I have cause to actually listen to the whales, but if I was to listen to the data we collect in real time, it would take far too long,” Kanes says. “We have more than 20 hydrophones in the water. They’re streaming to us 24 hours a day. There’s no way that I could ever catch up with our data collection if I was listening in real time.”

Another challenge faced by those creating whale AI is the presence of similar sounds that can lead to false positives—when the AI believes it is identifying a whale call but is actually hearing something like a bird call or the sound of a boat winch.

“There’s always going to be new sounds,” Kirsebom tells me. “So even when you’ve trained the network on all sorts of sounds, a new vessel may come by like a ship that makes a sound that the network has never heard before, and again, that’s where the networks sometimes struggle in a way that we humans don’t struggle … We’re not there yet in terms of mimicking our capacity to generalize.”

Kanes tells me about one memorable day when a humpback whale call detector made 300 detections in the span of one hour in the Strait of Georgia. Kanes looked closer at the data, and found that it was actually the shaft rub on a boat’s propeller creating a very convincing humpback-like sound.

“With all of that context, I can look at it and be like, actually that’s shaft rub,” Kanes says. “But … it would be so hard to account for all of the factors that go into my decision [that] actually this isn’t a humpback. ’Cause it has the right shape, it’s the right timbre (which is sort of like the quality of the sound), it’s the right frequency, it’s the right duration.”

In this way, these whale AI networks are still far behind humans when it comes to analyzing the context around a sound.

“That’s one thing that we’re lacking right now in the algorithms that we’ve been working with, is that knowledge of what happened … a minute ago or 50 minutes ago,” Kirsebom explains. “That’s very challenging.”

While some may fear AI will make humans obsolete, the reality is that this type of whale AI will probably not be replacing the role of bioacousticians. Instead, the two will work together as a team.

“Now that I’m really in it and I see how a lot of these machine learning algorithms function and how well they perform and all of the nuances that confuse them and all of the contexts that I actually use as a human annotator, I’m starting to realize actually, no, I’m probably not going to put myself out of a job,” Kanes says. “These tools will probably make my job easier in that they’ll pick out sounds without me having to go through them.”

As NGOs continue to work on whale AI projects, tech giants are jumping into the fray too. In 2018, at a workshop in Victoria, Google pitched the idea of developing real-time whale detection to Canada’s Department of Fisheries and Oceans (DFO)—the company had already developed similar technology for humpback detection in Washington for the National Oceanic and Atmospheric Administration.

Paul Cottrell, Marine Mammal Coordinator for the DFO, whose team has 20 hydrophones in the water, was excited to partner with Google on this project. His team also collaborated with Rainforest Connection, a non-profit tech group that previously worked with Google to create a web interface that would alert wildlife officials when chainsaw sounds were detected in the Amazon Rainforest.

“It’s just been incredible,” Cottrell says. “To actually pay to have this put in place would have been so expensive; if we had to do it without these collaborations, it wouldn’t have been possible. So this really only happened because of this Google team of amazing engineers that could put this cutting-edge programming together.”

Cottrell and his team have been provided with Google phones which notify them when the AI has picked up an alert at one of their stations. They can then listen to the hydrophones to determine if what the AI has detected is indeed a whale call. The DFO network utilizes experts in the field to identify the calls, pinpointing not just what species and ecotype of whale has been heard, but often even what pod the whale may belong to. This information is fed back into the AI, allowing it to improve constantly.

While the AI is still in its training phase, the hope is that it will soon be used to make real-time management decisions. For example, if the DFO knows that a large pod of southern residents is moving through a pass, they can alert vessels to slow down or avoid the area.

Beyond helping to prevent vessel strikes, Cottrell believes, this AI could be a key tool if the area were to experience an oil spill. “If there ever was a catastrophic or a large, significant spill in the Gulf Islands, we would be able to know where the whales are,” he says. “If we knew the spill trajectory we could get in front of that and prevent the whales from moving into an area to get exposed. So that’s another big piece of this project is to look at this threat minimization from anthropogenic threats.”

South of the border, other tech companies have been lending their programming prowess to the fight as well. In the summer of 2019, at a Microsoft hackathon in Seattle, a group of Microsoft employees began working on another algorithm to detect whale calls in real time. The project, called Orca Hello, has been in development ever since, with the team volunteering in their spare time to design and train the algorithm. It has now been deployed on three hydrophones in Washington run by the non-profit Orca Sound.

“We didn’t believe it till we saw it with our own eyes,” says Prakruti Gogia, a Microsoft software engineer who works on the HoloLens smart glasses, and a member of the Orca Hello team. “To actually see it work live, that was a really, really incredible experience.”

The algorithm was deployed in late September 2020, and it sent its first real-time alert a few days later. “The first thing it actually got were a couple of false positives,” Gogia says.

“Otter squeaks!” Gogia’s teammate Akash Mahajan adds excitedly. Mahajan, a Microsoft applied scientist, says that the speech recognition AI he works on for his day job is usually trained on tens of thousands of hours of labelled data. Orca Hello was trained on just fifteen.

“From the beginning … we wanted to create a prototype or a reference design for a system that by design has a feedback loop,” he says. “So the way we’ve set up our system is that we start with some small amount, but we can turn on those hydrophones and when false positives and things come back in they’re exactly from what we need, and we can use that to refine and continue to improve.”

Soon the AI was picking up the calls of orcas and even humpbacks.

“The very first time we heard humpbacks and the system actually picked up detected humpback calls in the audio, that was an incredible moment because they’re really, really distinctive calls, and so echoey,” Gogia says. “It was amazing for me. Like that was the wow moment.”

Scott Veirs, who co-founded Orca Sound with his father Val, calls these spectacular audio events “live concerts,” and views them as a way to forge a connection between humans and whales that will—he hopes—result in an increase in empathy for, and activism around, the plight of the whales, particularly the southern resident killer whales. That is the ethos behind Orca Sound, which utilizes live streaming of their network of three hydrophones to allow anyone to listen in on the underwater world in real time.

“The app itself is trying to engage folks in acting on behalf of the whales—that’s its primary purpose—but it’s also trying to engage them as citizen scientists,” Veirs says. “So from a student’s perspective, maybe the most interesting thing to do is to use some of our learning resources to teach your students about what killer whales sound like and what other common sounds in the Salish Sea are, and then get them pressing the button.”

With so many talented scientists investing their energy in these AI projects, progress in the field of whale AI looks set to accelerate.

“Over the many decades that this research has evolved to where we are now … I look at this and think, wow, in 10 years, what are we going to have? Because what’s changed in the past 10 years has been amazing,” Cottrell says. “The technology, the artificial intelligence, the programming … is just changing so rapidly. And to keep up with that and help the whales is so important.”

In addition to their benefits for whale research and protection, these hydrophone and AI technologies could be used to study other parts of the underwater ecosystem as well. Xavier Mouy, a PhD student at the School of Earth and Ocean Sciences at the University of Victoria and an acoustician at JASCO Applied Sciences, is using hydrophone data in his doctoral research to identify and monitor the sounds made by different fish species. This research could be particularly beneficial for fish that require conservation strategies, like rockfish.

“As I started to look at data from hydrophones that were collected along the coast … I could still find, pretty much everywhere, these very low frequency sounds that were super grunty and not from a marine mammal,” Mouy tells me. “I found out that those are fish, and so if we can hear fish in most of the hydrophones that we have along the coast, that means there’s also good potential to monitor fish just using their sounds.”

As this field of underwater AI continues to grow on the west coast, the many disparate projects are working to come together and collaborate—after all, the whales don’t recognize borders.

“Coast-wide, there’s a lot of hydrophones out there and they’re all in different networks,” Cottrell says. “And I think linking those in as well, is going to be important and I think a challenge going forward, but something that hopefully we can connect … and help the whales.”
