By Michael Carter-Arlt

ME: “Hey SIRI. What do you look like?”

When I was completing my master’s degree, I pondered this question a lot. If SIRI or any AI had a face, what would it look like?

SIRI: “I imagine I probably look like colourful sound waves.”

I believe that the future of artificial intelligence (AI) will have a face—or, more accurately, many faces. But, rather than a device or the company that makes it determining what an AI looks like (inevitably giving us 3D variations on an old Winamp visualizer), appearances will be determined and customized by individual users—and will be every bit as varied as the live human beings interacting with them.

In 2018, I demonstrated this idea in front of a class by creating the illusion of myself as an AI hologram. The set-up was simple—just a hacked CD jewel case and a cellphone video of my face, shot in a dark room with blue light—but the effect was convincing enough to capture the attention of my entire class, almost as if nothing else mattered but me and what I was presenting—as though I were the Wizard of Oz.

This experience made me realize just how useful holograms (or the illusion of them) could be in helping increase engagement and combat distractions in a classroom.

As is often the case, my curiosity eventually led me through the Looking Glass.

When we use the word hologram, we often think of movies like Minority Report or Star Wars, in which characters interact with free-standing, three-dimensional images made of projected light that are visible to the naked eye. We still don’t know whether these kinds of holograms will ever become a reality. People have been experimenting with various interpretations of so-called holograms since the mid-1800s. However, the examples we see in science fiction are technically not holograms. Rather, they are 3D free-space volumetric images, which involve much more complicated technology than the would-be holograms that many people are familiar with from theme parks or music festivals.

The vast majority of these familiar “holographic” images are created using the Pepper’s Ghost illusion—the same trick I used to wow my class. John Henry Pepper’s technique, which he first achieved in 1862, simulates the effect of a hologram but is actually more like a clever parlour trick. Used in everything from Disney theme parks to news teleprompters, the illusion is usually created using a piece of glass that is angled to reflect an image displayed on a screen, so that a ghostlike reflection of the image appears on the glass. A simple version of this illusion can be created using a smartphone and a CD case, much like the one I used for my class demonstration. However, the same illusion can also be executed on a much grander scale. Notable examples from recent years include the hologram of the late Tupac Shakur, which performed with Dr. Dre and Snoop Dogg at the Coachella Music Festival in 2012, and Hatsune Miku, a software-generated pop star who is big in Japan and was scheduled to play the same festival in 2020.
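For readers who want to see why the reflection seems to float behind the glass, here is a minimal sketch of the geometry, viewed side-on. It assumes an idealized flat mirror tilted at 45 degrees and a screen lying flat beneath it. The function name, the coordinates and the 10 cm distance are all illustrative assumptions, not measurements from any real set-up.

```python
import math

def reflect_point(point, glass_point, glass_normal):
    """Mirror a 2D point across a line (the glass, seen side-on).

    The mirrored position is where the viewer perceives the "ghost".
    """
    nx, ny = glass_normal
    length = math.hypot(nx, ny)
    nx, ny = nx / length, ny / length          # normalize the glass normal
    px, py = point
    qx, qy = glass_point
    # Signed distance from the screen point to the glass
    distance = (px - qx) * nx + (py - qy) * ny
    # Move the point twice that distance through the glass
    return (px - 2 * distance * nx, py - 2 * distance * ny)

# Assumed layout (units: cm): the glass passes through the origin at 45 degrees
# (the line y = x), the viewer sits on the +x side, and a pixel on the
# upward-facing screen lies 10 cm directly below the glass.
screen_pixel = (0.0, -10.0)
ghost = reflect_point(screen_pixel, (0.0, 0.0), (1.0, -1.0))
print(ghost)
```

In this layout, the pixel 10 cm below the glass is perceived about 10 cm behind it, at the viewer's eye level, which is why the image seems to hover in the space beyond the glass.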

These uses of Pepper’s Ghost are convincing, but in order to achieve what we see in the movies, there would need to be some way of manipulating beams of light to simulate actual objects. Currently, no such technology exists. There is, however, an alternative to sci-fi holograms, which utilizes technology that goes far beyond mere smoke and mirrors.

What is the Looking Glass?

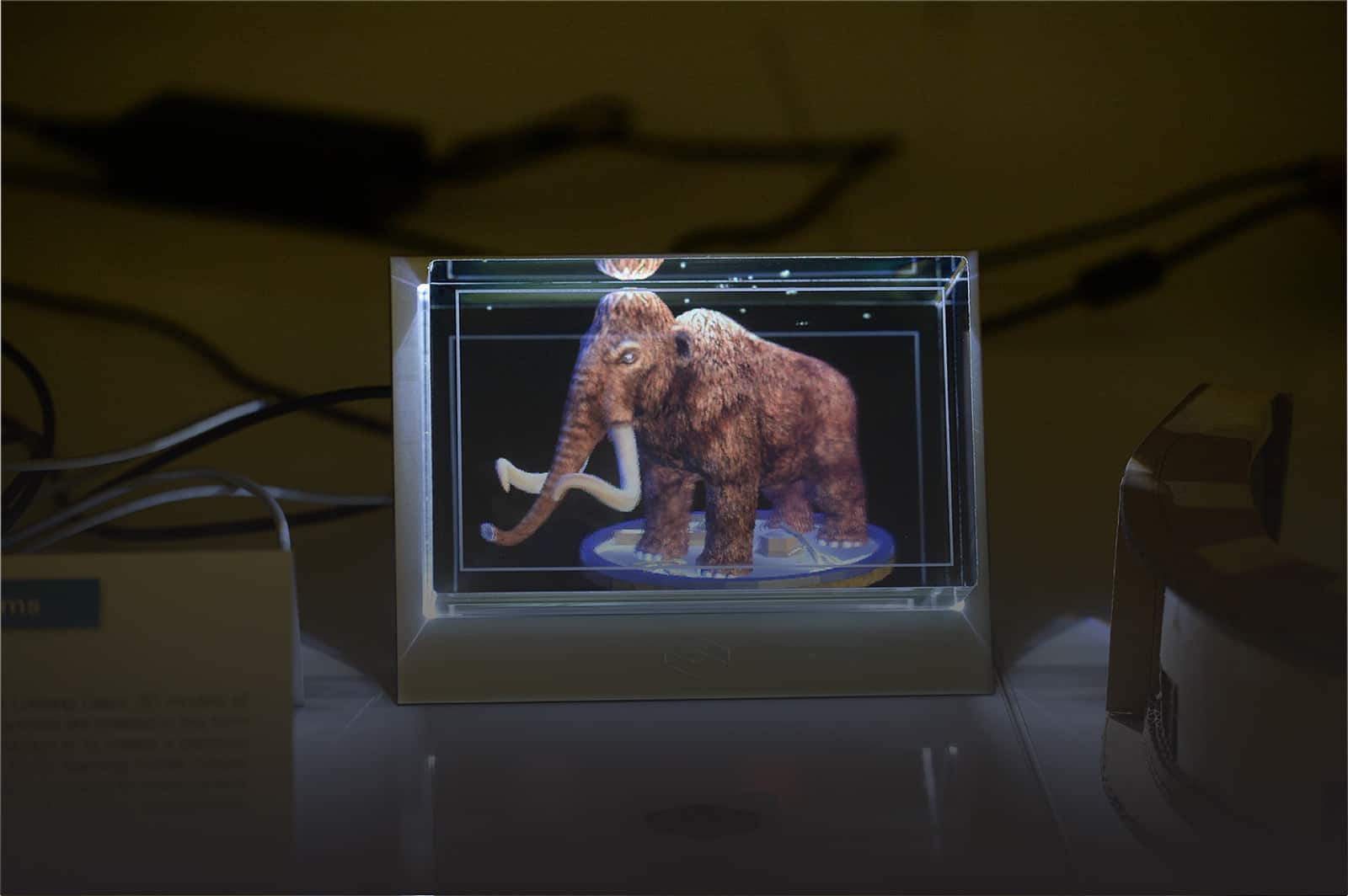

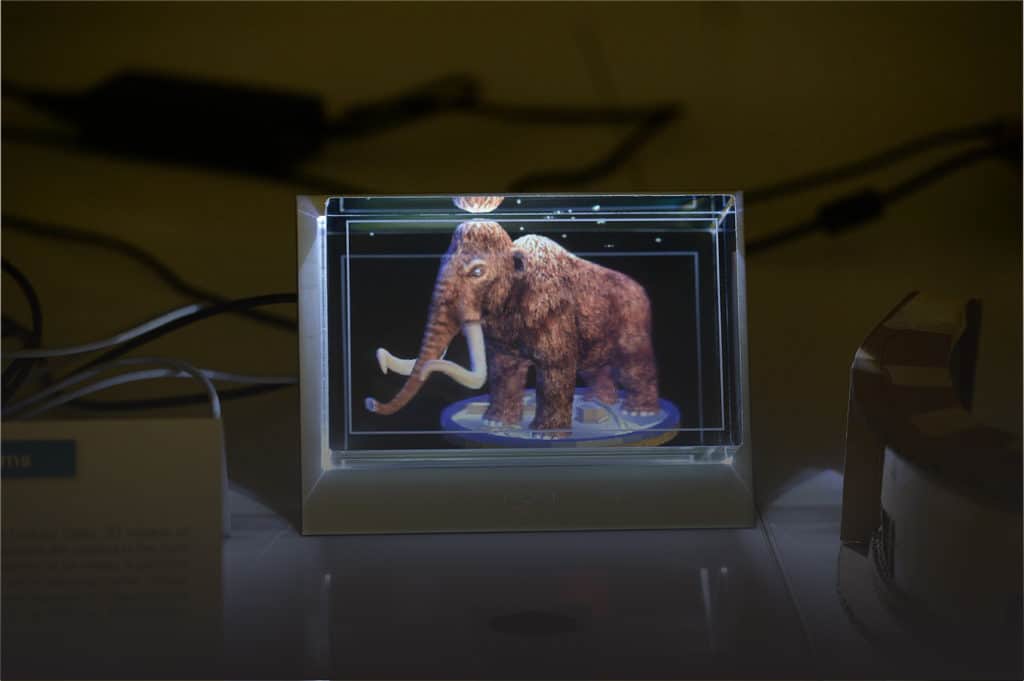

In 2018, the Looking Glass Factory launched the world’s first desktop holographic display development kit, known as the Looking Glass. Featuring a cubical glass display capable of rendering 3D objects that appear almost lifelike, it is the closest thing to those sci-fi holograms that is currently available.

Put simply, the Looking Glass allows 3D creators to import their images and project them onto a block of clear acrylic, giving the appearance of a display in a glass box.

The Looking Glass is powered by proprietary volumetric light field technology, which projects up to 100 perspectives of a single 3D image onto the acrylic using a lenticular lens—an array of lenses designed to magnify different images when viewed from different angles. (Think of the cards or stickers made of ridged plastic that used to come in cereal boxes, with images that “moved” when you tilted them back and forth.)

What this means is that, as the viewer moves around the Looking Glass, the perspective of the projected 3D environment in the display moves with them, offering different views of the 3D object and making it appear like a form in space, rather than a projection on a screen.
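The idea can be sketched in a few lines of code. This is a simplified illustration, not the Looking Glass Factory's actual method: it assumes the perspectives are spread evenly across a fixed viewing cone, and the 50-degree cone width and the function name are hypothetical values chosen for the example. Only the figure of 100 perspectives comes from the description above.

```python
def view_index(viewer_angle_deg, num_views=100, view_cone_deg=50.0):
    """Pick which pre-rendered perspective a viewer sees.

    viewer_angle_deg: horizontal angle of the viewer, where 0 is straight on,
                      negative is to the left and positive is to the right.
    num_views:        number of perspectives rendered (up to 100 in the text).
    view_cone_deg:    assumed total width of the viewing cone (hypothetical).
    """
    half_cone = view_cone_deg / 2
    # Simplification: hold the outermost view for viewers outside the cone
    clamped = max(-half_cone, min(half_cone, viewer_angle_deg))
    # Convert the angle to a 0..1 position across the cone
    fraction = (clamped + half_cone) / view_cone_deg
    # Map that position to one of the rendered perspectives
    return min(num_views - 1, int(fraction * num_views))

# A viewer walking from left to right steps through the perspectives in order
for angle in (-25.0, -10.0, 0.0, 10.0, 25.0):
    print(angle, view_index(angle))
```

Because each small change in angle selects a slightly different rendering of the scene, the object appears to hold its place in space as the viewer moves.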

One of the key advantages of the Looking Glass compared to other forms of immersive technology, such as AR or VR, is that it doesn’t require headgear to view true 3D content. It can be used to view still images in 3D, 3D animations, and stereoscopic images, and it supports Light Detection and Ranging (LiDAR), which uses lasers to scan objects and take digital measurements that can then be converted into images. In addition, the Looking Glass can integrate with the popular game engines Unity and Unreal Engine, allowing a wide community of 3D animators and game designers to create lenticular holographic content.

The Looking Glass Factory recently announced a new product called the Looking Glass Portrait, an even more accessible device that will allow anyone from casual photographers to advanced 3D modellers to render their content as lenticular holograms. In conjunction with the late 2020 release of the new iPhone 12 Pro, which has LiDAR functionality in its camera, this has the potential to prompt a bump in content creation for the Looking Glass—which, in turn, will help reduce the cost for educational institutions to use holographic displays, and could spawn a new generation of 3D hologram creators.

What is possible with the Looking Glass?

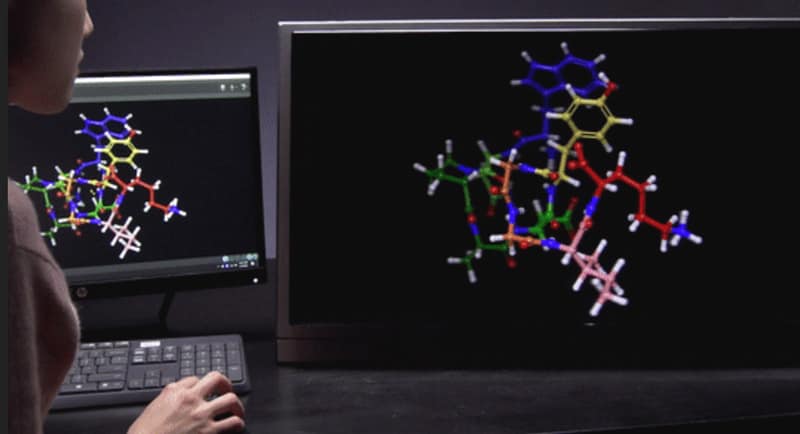

Since the first version of the Looking Glass was released in 2018, various industries have found their own ways to use the capabilities of holographic display. Schrödinger, a drug discovery and materials research company, incorporates the Looking Glass into its software workflow to enable teams of chemists to view complex 3D structures together, thus accelerating the discovery of medicines and materials. The Looking Glass has also been used in the medical field for cross-sectional imaging, and in engineering for visualizing 3D models of engines and other machines.

In October of 2019, filmmaker Tim Burton utilized the Looking Glass for his Lost Vegas exhibit at the Neon Museum in Las Vegas. Several displays were used to create a new form of exhibition, adding a layer of depth to never-before-seen, original artworks by Burton himself. As was the case with Schrödinger, the Looking Glass proved to be an effective tool for viewing 3D content, not only because of its immersive properties but also because of its accessibility. For Lost Vegas, it served a practical purpose as well as a technological one, eliminating the need for visitors to wear headgear or other equipment.

Developing for the Looking Glass

I first experimented with Looking Glass Factory tools in 2017, before the development of the current Looking Glass, when the company’s flagship device was called the Holoplayer One. Today, it would be hard to find a Holoplayer One; most people other than Looking Glass Factory insiders won’t even know it existed. Ever since the creation of the Looking Glass in 2018, I have been using it for purposes related to research and education—particularly around how holographic displays can be incorporated into classrooms.

The most recent project I worked on that involves the Looking Glass was for the Remastered exhibition at the Aga Khan Museum in Toronto. The exhibit focuses on digitally re-imaging paintings by early Islamic masters, and utilizes the Looking Glass in a way very similar to that used for Burton’s Lost Vegas exhibit. Instead of creating 3D lenticular artworks from illustrations, however, the museum’s collection of oil paintings was used as a reference for digital 3D scenes. This was accomplished using a combination of software tools, including Adobe Photoshop for graphic design, Blender for 3D modelling, and Unity for texturing, lighting and 3D animation.

The 3D renderings of the artworks not only provide a modern take on classic works, but also provide additional educational and pedagogical context. Islamic artworks from many early manuscripts eschew the use of linear perspective, and their planar style presents challenges for the accurate rendering of architectural elements in space. Adapting the images for the Looking Glass allows the viewer to experience a sense of depth and volume that helps us understand the complexity of the structures depicted.

In the era of COVID-19, contactless interactions with digital media are more a necessity than a trend. This means technologies that require the user to wear or handle equipment could be hit hard in terms of educational adoption. VR headsets, for example, will likely have far less appeal than they once did, given that they come into physical contact with the face of the wearer, and are commonly shared among a number of people in a social setting. In the Remastered exhibit, the Looking Glass provides a way of interacting with a 3D scene without the need to wear or touch any additional equipment. This was already a consideration when developing the exhibit, since it also meant being able to offer an interaction with 3D content that did not need any prior explanation—a key consideration for accessibility with older audiences. It will no doubt become much more of a priority for many institutions after the COVID-19 pandemic.

Further Reading

The Looking Glass Factory provides an abundance of resources for learning more about its technology and how to develop content for it. To develop for the Looking Glass, it’s best to start with Unity or Unreal for creating 3D designs. Blender is also useful for 3D modelling. Although each program has its own level of complexity, each is user-friendly in its own way, and can be picked up easily once you learn the basics. (See Pinnguaq’s online resources on Blender.) On top of this, each program is free to use for hobbyists, making Looking Glass development accessible to a much wider audience.

References

- Lam, K. Y. 03 October 2016. “The Hatsune Miku Phenomenon: More than a Virtual J-Pop Diva.” The Journal of Popular Culture. Wiley Online Library.

- Burdekin, R. 2015. “Pepper’s Ghost at the Opera.” Theatre Notebook, 69 (3):152-64.

- Rogers, S. 03 March 2020. “Coronavirus: Practical Hygiene Advice for Virtual Reality Users.” forbes.com.